There are three ways to remove duplicates from a flat file:

1) Using a Unix command in the session.

2) Using the dynamic lookup feature of the Lookup transformation.

3) Using the Sorter transformation.
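The post does not say which Unix command to use. One common choice, assumed here, is `sort -u`, which sorts the file and keeps a single copy of each identical line. It could run, for example, as a pre-session command that writes a cleaned file for the session to read. As a sketch of the effect only, the Python below reproduces that behaviour. The function names and sample rows are hypothetical. Because `sort -u` compares whole lines, a header row would be sorted in with the data.

```python
from pathlib import Path


def sort_unique(lines):
    """Return the distinct lines in ascending order, like `sort -u`.

    Python compares strings by code point, which matches `sort -u`
    under LC_ALL=C; other locales may order lines differently.
    """
    return sorted(set(lines))


def dedupe_flat_file(src, dst):
    """Write the distinct lines of `src` to `dst`; return how many lines were dropped."""
    lines = Path(src).read_text().splitlines()
    unique = sort_unique(lines)
    # End every line, including the last, with a newline as Unix tools expect.
    Path(dst).write_text("".join(line + "\n" for line in unique))
    return len(lines) - len(unique)


# Hypothetical comma-delimited records; the first and third are identical.
rows = ["101,Ravi,HYD", "102,Anu,BLR", "101,Ravi,HYD"]
print(sort_unique(rows))  # ['101,Ravi,HYD', '102,Anu,BLR']
```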

Using the Sorter transformation is the easiest way to remove duplicates. However, it can hurt performance when you are loading a huge amount of data, because the Sorter has to cache and sort the entire input before it passes rows on.

If you are loading a huge amount of data, it is better to use a dynamic lookup.

First, let's see how to do it using the Sorter.

All you have to do is add a Sorter transformation to the mapping and enable the Distinct option on the Properties tab of the Sorter.

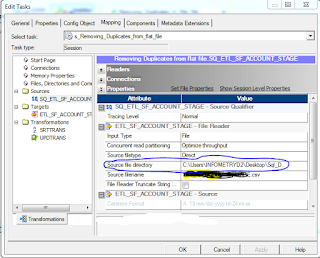

Then, in the session, enter the flat file path in the 'Source file directory' attribute.

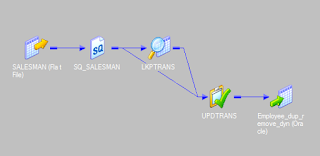

Please follow the screenshots for each step.

One more thing to observe here: the Sorter is a passive transformation until you enable the Distinct option. Once Distinct is enabled, it becomes an active transformation, because it can now output fewer rows than it receives.
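To make the Sorter behaviour concrete, here is a small Python sketch that mimics a Sorter with Distinct enabled. It assumes the whole row is compared (with Distinct on, all ports become part of the sort key): the rows are sorted, and any row equal to the one before it is dropped. The function name and sample rows are made up for illustration. Three rows go in and two come out, which is exactly why the transformation counts as active once Distinct is on; and since the whole input must be sorted first, it also shows why this approach gets expensive on very large files.

```python
from typing import List, Tuple

Row = Tuple[str, ...]


def sorter_distinct(rows: List[Row]) -> List[Row]:
    """Sort on every port, then drop rows identical to the previous one."""
    out: List[Row] = []
    for row in sorted(rows):
        # After sorting, duplicates sit next to each other, so comparing
        # with the last emitted row is enough to detect them.
        if not out or out[-1] != row:
            out.append(row)
    return out


# Hypothetical source rows: (id, name, city); the first and third repeat.
source = [
    ("101", "Ravi", "HYD"),
    ("102", "Anu", "BLR"),
    ("101", "Ravi", "HYD"),
]
target = sorter_distinct(source)
print(len(source), "rows in,", len(target), "rows out")  # 3 rows in, 2 rows out
```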

2. Removing duplicates using a dynamic lookup

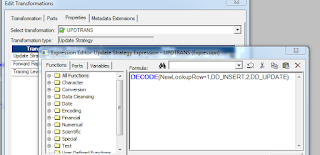

When you enable the dynamic lookup cache property in a Lookup transformation, it adds a NewLookupRow port.

The NewLookupRow port can have the following values:

0 The PowerCenter Server does not update or insert the row in the cache.

1 The PowerCenter Server inserts the row into the cache.

2 The PowerCenter Server updates the row in the cache.
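The post stops before wiring the lookup into a mapping. A common design, assumed in this sketch, is to pass the NewLookupRow port to a Filter transformation with the condition `NewLookupRow = 1`, so only rows newly inserted into the cache (the first occurrence of each key) reach the target. The Python below imitates that cache and filter. `key_index`, `update_changed`, and the sample rows are hypothetical stand-ins for the lookup condition and the lookup's update setting; they are not PowerCenter names.

```python
from typing import Dict, Iterable, Iterator, List, Tuple

Row = Tuple[str, ...]


def dynamic_lookup(rows: Iterable[Row], key_index: int = 0,
                   update_changed: bool = False) -> Iterator[Tuple[int, Row]]:
    """Yield (new_lookup_row, row) pairs, imitating a dynamic lookup cache.

    0 -> cache unchanged (key already cached, nothing to update)
    1 -> row inserted into the cache (first time this key is seen)
    2 -> cached row updated (only if update_changed is True and data differ)
    """
    cache: Dict[str, Row] = {}
    for row in rows:
        key = row[key_index]
        if key not in cache:
            cache[key] = row
            yield 1, row
        elif update_changed and cache[key] != row:
            cache[key] = row
            yield 2, row
        else:
            yield 0, row


def remove_duplicates(rows: Iterable[Row], key_index: int = 0) -> List[Row]:
    """Keep only rows the cache inserted, i.e. Filter condition NewLookupRow = 1."""
    return [row for flag, row in dynamic_lookup(rows, key_index) if flag == 1]


# Hypothetical source rows: (id, name, city); id 101 appears twice.
source = [
    ("101", "Ravi", "HYD"),
    ("102", "Anu", "BLR"),
    ("101", "Ravi", "HYD"),
]
print(remove_duplicates(source))
# [('101', 'Ravi', 'HYD'), ('102', 'Anu', 'BLR')]
```

Unlike the Sorter, this approach keeps the first occurrence of each key in its original order and never sorts the data; the cache does, however, hold one entry per distinct key.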
